August 22, 2025

Imagine This as a Teenager…

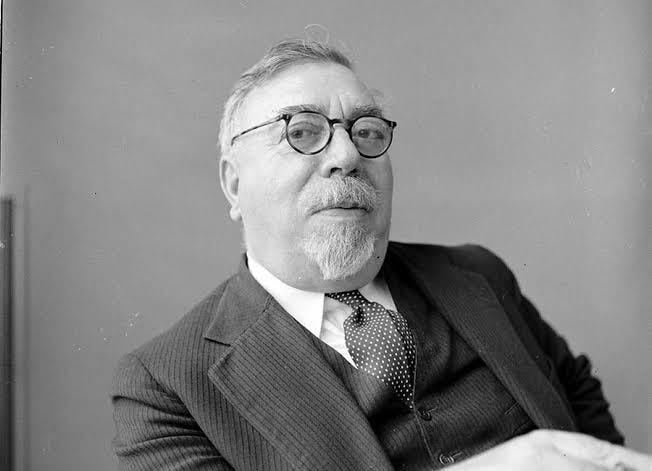

Norbert Wiener’s father, Leo, originally went to medical school in Warsaw, Poland, before emigrating to the United States. Although he ultimately became disillusioned with medicine, Leo kept a studious approach to life. He arrived in the US in 1880, settling first in New Orleans. A few years later, he became a schoolteacher in Kansas City before moving up to the University of Missouri. There, he became a professor in the Modern Languages department.

Leo believed his son could master languages and logic in equal measure, and that belief shaped Norbert’s astonishing early life. Norbert was the eldest child of Leo Wiener, a polymath and Harvard professor of Slavic languages, and Bertha Kahn, who also came from a family of intellectuals.

By age 10, Norbert was writing philosophical essays. At 11, he entered Tufts College (now Tufts University), and by 14, he had graduated. At 18, he earned a PhD in mathematical logic from Harvard, then went on to study under Bertrand Russell at Cambridge and with David Hilbert at Göttingen. While still a teenager, he spent time defining ordered pairs in set theory. It set him apart from even the brightest of his peers (then and now).

But genius often comes with social difficulties. Despite his brilliance and academic accolades, Harvard declined to keep Wiener on its faculty. Some argue the decision came from the era’s anti-Semitic barriers and academic conservatism, though the precise reasons remain unconfirmed.

Overcoming obstacles often leads to fruitful paths, and it did for Wiener. It led him to MIT in 1919, where he spent over four decades building a legacy that would ripple through science, engineering, and philosophy forever. It made him a pioneer of AI as we know it.

Separate Signal From Noise

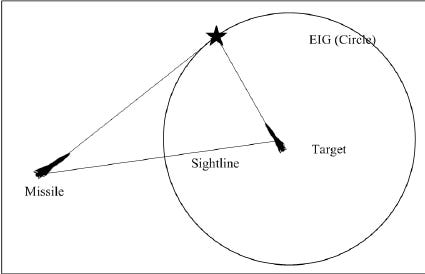

World War II marked a turning point in Wiener’s career. Recruited to MIT’s Radiation Laboratory, he worked on anti-aircraft radar systems. His work revolved around creating anti-aircraft devices, which was no simple task. Compared with traditional targets, airplanes traveled quickly and erratically. Shooting down a moving target requires the system to lead the target, and the planes’ speed and seemingly random movements made that difficult.

It created an engineering challenge whose solutions have since developed into the autonomous systems (like AI) that we know today. His system would automatically aim at an enemy aircraft based on information it received from a radar ping. It would then calculate a firing position, accounting for the speed of the aircraft, its distance, and the speed of the ammunition. Then, it would repeat the process with the next radar ping, automatically improving the aim each time. It was a continuous feedback loop, and it led directly to automated guidance and control systems.
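To make the "lead the target" idea concrete, here is a minimal sketch of the geometry, not Wiener’s actual wartime design. It assumes a flat 2D world, a gun at the origin, a shell that flies in a straight line at constant speed, and a plane that holds its velocity between pings. The function names (`intercept_time`, `aim_points`) are made up for illustration.

```python
import math


def intercept_time(px, py, vx, vy, shell_speed):
    """Return the earliest t > 0 at which a shell fired now meets the target.

    Assumptions: the gun sits at the origin, the target is at (px, py) and
    keeps flying at constant velocity (vx, vy). Returns None if no intercept.
    """
    # Solve |p + v*t| = shell_speed * t, which is a quadratic in t.
    a = vx * vx + vy * vy - shell_speed * shell_speed
    b = 2.0 * (px * vx + py * vy)
    c = px * px + py * py
    if abs(a) < 1e-12:
        # Target and shell have equal speed, so the equation is linear.
        if abs(b) < 1e-12:
            return None
        t = -c / b
        return t if t > 0 else None
    disc = b * b - 4.0 * a * c
    if disc < 0:
        return None
    root = math.sqrt(disc)
    times = [(-b - root) / (2.0 * a), (-b + root) / (2.0 * a)]
    positive = [t for t in times if t > 0]
    return min(positive) if positive else None


def aim_points(pings, ping_interval, shell_speed):
    """After each new ping, re-estimate velocity and recompute the aim point."""
    aims = []
    for (x0, y0), (x1, y1) in zip(pings, pings[1:]):
        # Crude velocity estimate from the two most recent pings.
        vx = (x1 - x0) / ping_interval
        vy = (y1 - y0) / ping_interval
        t = intercept_time(x1, y1, vx, vy, shell_speed)
        aims.append(None if t is None else (x1 + vx * t, y1 + vy * t))
    return aims
```

Each pass through the loop in `aim_points` is one turn of the feedback cycle: new ping, new velocity estimate, new aim point. The velocity estimate here trusts the last two pings completely, which is exactly what breaks down once the readings get noisy.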

It would not be such a difficult task if planes moved in a straight line. But pilots knew they were targets, and they weren’t going to make shooting them down easy. Their erratic movements generated wildly different positions from one ping to the next. Lots of noise, and Norbert had to dig out the signal.

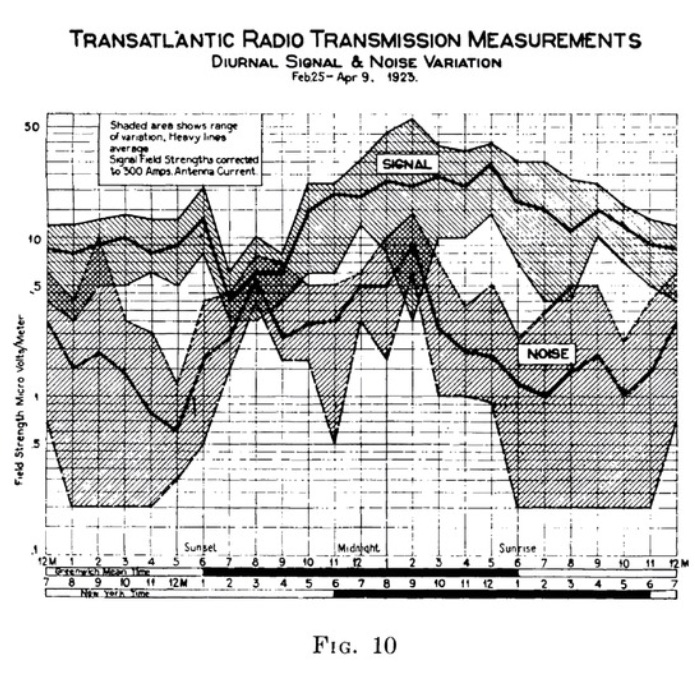

The phrase “signal from noise” goes back further than Norbert, to the 1920s in fact, when engineers measured signal and noise in transatlantic radio transmissions.

Norbert’s work formalized the concept and took it from measurements to mathematics, which allowed for predictions.

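Some definitions may help here:

Signal: the information you actually want. In radar, that means the true location of the aircraft.

Noise: random errors or inaccuracies. In radar, that could mean static, interference, or fluctuations that do not represent the actual location of the aircraft.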

Instead of simply taking the newest information from the radar and aiming based on the reading, he took information from the prior pings as well. Each reading didn’t get the same weight, though. His system prioritized some readings over others. The most recent information didn’t always carry the most weight. Here are three examples:

- If a plane flew in a straight line, the readings had minimal noise. The latest information was likely the best and was weighted that way.

- With predictable motions, like a zig-zag pattern, the earlier signals could help quite a bit (and maybe more than the most current reading).

- When the most recent readings came with more noise, the system gave more weight to prior (less noisy) readings.
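The third case above can be sketched with inverse-variance weighting. This is a simpler stand-in for the idea, not the Wiener filter itself. It assumes every reading measures the same quantity, the errors are independent, and you already know how noisy each reading is. The function name and the numbers are hypothetical.

```python
def weighted_estimate(readings, noise_variances):
    """Combine readings, trusting each one in proportion to 1 / its variance."""
    weights = [1.0 / v for v in noise_variances]
    total = sum(weights)
    return sum(w * r for w, r in zip(weights, readings)) / total


# Two clean older readings and one noisy recent reading (made-up values).
readings = [10.0, 10.4, 13.0]
noise_variances = [1.0, 1.0, 9.0]

estimate = weighted_estimate(readings, noise_variances)
plain_average = sum(readings) / len(readings)
```

The noisy reading of 13.0 still counts, but only a little, so `estimate` stays much closer to the two cleaner readings than `plain_average` does.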

But how could the system determine what was noise and what was signal?

Wiener’s answer became the Wiener filter, a mathematical method for estimating the true signal from noisy data. It assumed all data had both signal and noise. All of it. Given a noisy measurement, the Wiener filter could estimate the true signal.

The system used assumed patterns or prior knowledge to predict the signal. It assumed the signal was more predictable, and more correlated with itself, than the noise. When it received an actual reading, it compared the reading to the prediction. Then, using the Wiener-Hopf equations, the Wiener filter chose the weights that minimized the average squared error of its estimate.
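Here is a small, discrete-time sketch of that idea. Wiener’s original work was in continuous time, so treat this as a modern textbook form rather than his derivation. It builds a short filter whose weights `w` solve the Wiener-Hopf equations `R w = p`, where `R` holds the autocorrelation of the noisy readings and `p` holds the cross-correlation between the clean signal and the noisy readings. One loud assumption: the sketch estimates `p` from a known clean signal, which a real radar would not have. The sine wave, noise level, and function names are all made up for illustration.

```python
import math
import random


def correlation(a, b, lag):
    """Sample estimate of the average of a[n] * b[n - lag]."""
    n = len(a)
    return sum(a[i] * b[i - lag] for i in range(lag, n)) / n


def solve_linear(A, b):
    """Solve A x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    M = [row[:] + [b[i]] for i, row in enumerate(A)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[pivot] = M[pivot], M[col]
        for r in range(col + 1, n):
            f = M[r][col] / M[col][col]
            for c in range(col, n + 1):
                M[r][c] -= f * M[col][c]
    x = [0.0] * n
    for i in range(n - 1, -1, -1):
        tail = sum(M[i][j] * x[j] for j in range(i + 1, n))
        x[i] = (M[i][n] - tail) / M[i][i]
    return x


def wiener_fir(noisy, clean, taps):
    """Weights of a causal FIR filter from the Wiener-Hopf equations R w = p."""
    r = [correlation(noisy, noisy, k) for k in range(taps)]
    p = [correlation(clean, noisy, k) for k in range(taps)]
    R = [[r[abs(i - j)] for j in range(taps)] for i in range(taps)]
    return solve_linear(R, p)


def apply_fir(w, x):
    """y[n] = sum of w[j] * x[n - j]; samples before the start count as 0."""
    return [sum(w[j] * x[n - j] for j in range(len(w)) if n - j >= 0)
            for n in range(len(x))]


def mse(a, b):
    """Mean squared error between two equal-length sequences."""
    return sum((u - v) ** 2 for u, v in zip(a, b)) / len(a)


rng = random.Random(0)
clean = [math.sin(2 * math.pi * n / 50) for n in range(2000)]
noisy = [s + rng.gauss(0.0, 0.5) for s in clean]

weights = wiener_fir(noisy, clean, taps=4)
filtered = apply_fir(weights, noisy)
```

Comparing `mse(filtered, clean)` with `mse(noisy, clean)` shows how much of the noise the filter removes. Notice that no single reading gets "cleaned" on its own. The weights come from statistics gathered over many readings.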

Essentially, it did not seek to separate the signal and noise in each individual reading. It worked by using many readings over time to separate the two. Philosophically, it’s a powerful reminder that you cannot separate signal and noise unless you actively make predictions about what you think will happen and measure it against what actually happens. It requires active participation and feedback.

The filter’s impact is hard to overstate. It remains a backbone of modern signal processing. It aids in speech enhancement by separating speech from background noise in hearing aids, noise-canceling earphones, and video conferencing.

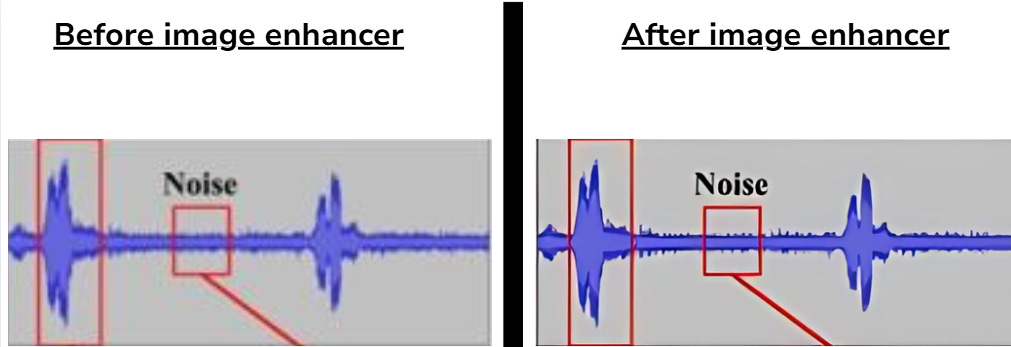

Image Enhancing

The filter also helps clarify images. X-rays, CT scans, and MRIs, for example, use the filter to remove graininess and visual anomalies so doctors get clearer medical images and can better diagnose patients. Everyday consumers use the technology to sharpen images so their content looks better.

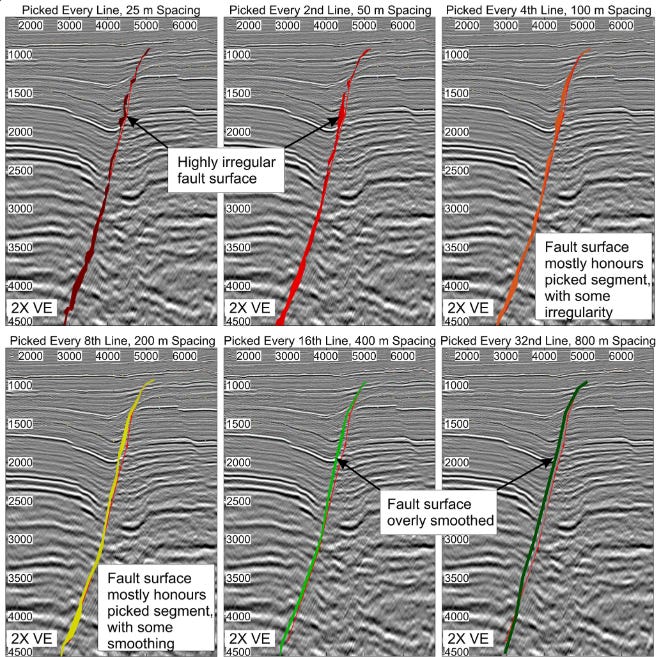

Geology

Geologists use the technology to enhance seismic readings. It’s important in industries dependent on finding oil, gas, or mineral deposits because it helps differentiate between geological strata. They can also use it to improve earthquake detection and measure changes in the Earth’s inner layers over time.

Communication

We see the biggest impact on our modern lives, though, in how it helps communication technology. Our phone calls rarely have static now, data travels over the internet and across wireless networks with less interference, radio and television broadcasts don’t have the fuzziness they did decades ago, and our devices will accurately tell us where we are while traveling 90 mph down the highway using GPS because Wiener filters remove distortions caused by the atmosphere.

Order from Chaos

At MIT, Wiener became a legend. Students recalled his poetic lectures on Brownian motion, which is the seemingly random movement you see in particles (like dust) floating in the air. The movements seem random because molecules, which you cannot see with the naked eye, constantly bump into tiny particles like dust or pollen and move them side to side like pinballs bouncing between bumpers. Einstein explained the phenomenon in 1905, and it provided evidence of the existence of atoms.

Then Wiener got his hands on it. He took Einstein’s efforts and built a rigorous mathematical framework for Brownian motion. It’s now known as the Wiener process, and it is a cornerstone of probability theory and stochastic processes.
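A quick simulation shows what the Wiener process looks like in practice. This is a minimal sketch of the standard discrete approximation: start at zero, then add an independent Gaussian step with variance equal to the time step `dt`. The function name and the step counts are arbitrary choices for illustration.

```python
import math
import random


def simulate_wiener(steps, dt, rng):
    """Return one sampled path W(0), W(dt), ..., W(steps * dt)."""
    path = [0.0]
    step_std = math.sqrt(dt)  # each increment has variance dt
    for _ in range(steps):
        path.append(path[-1] + rng.gauss(0.0, step_std))
    return path


# Sample many paths out to time T = 100 * 0.01 = 1.0 and look at the endpoints.
rng = random.Random(42)
endpoints = [simulate_wiener(100, 0.01, rng)[-1] for _ in range(2000)]
mean_end = sum(endpoints) / len(endpoints)
var_end = sum((e - mean_end) ** 2 for e in endpoints) / len(endpoints)
```

No single path is predictable, but the spread of the endpoints is: `var_end` should land close to the elapsed time, 1.0. That is the kind of statement the rigorous framework makes possible.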

By making the mathematics rigorous, Wiener allowed a number of industries to start using Brownian motion to make predictions. It’s the basis of the Black-Scholes option pricing model, which helps traders estimate the value of options and other derivatives. Myron Scholes and Robert Merton won the Nobel Prize in economics in 1997 for their work on the model, directly benefiting from Norbert’s work.
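For the curious, the standard Black-Scholes formula for a European call option fits in a few lines. This sketch assumes the textbook setup: no dividends, a constant interest rate, and constant volatility, with the stock price driven by a Wiener process. The parameter names and sample inputs are illustrative only.

```python
import math


def norm_cdf(x):
    """Standard normal cumulative distribution function."""
    return 0.5 * (1.0 + math.erf(x / math.sqrt(2.0)))


def black_scholes_call(spot, strike, rate, volatility, years):
    """Price of a European call under the textbook Black-Scholes assumptions."""
    sqrt_t = math.sqrt(years)
    d1 = (math.log(spot / strike)
          + (rate + 0.5 * volatility ** 2) * years) / (volatility * sqrt_t)
    d2 = d1 - volatility * sqrt_t
    return spot * norm_cdf(d1) - strike * math.exp(-rate * years) * norm_cdf(d2)


# Stock at 100, strike 100, 5% rate, 20% volatility, one year to expiry.
price = black_scholes_call(100.0, 100.0, 0.05, 0.2, 1.0)  # roughly 10.45
```

The `volatility * sqrt_t` term is where the Wiener process shows up: uncertainty grows with the square root of time, just as it does for a particle in Brownian motion.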

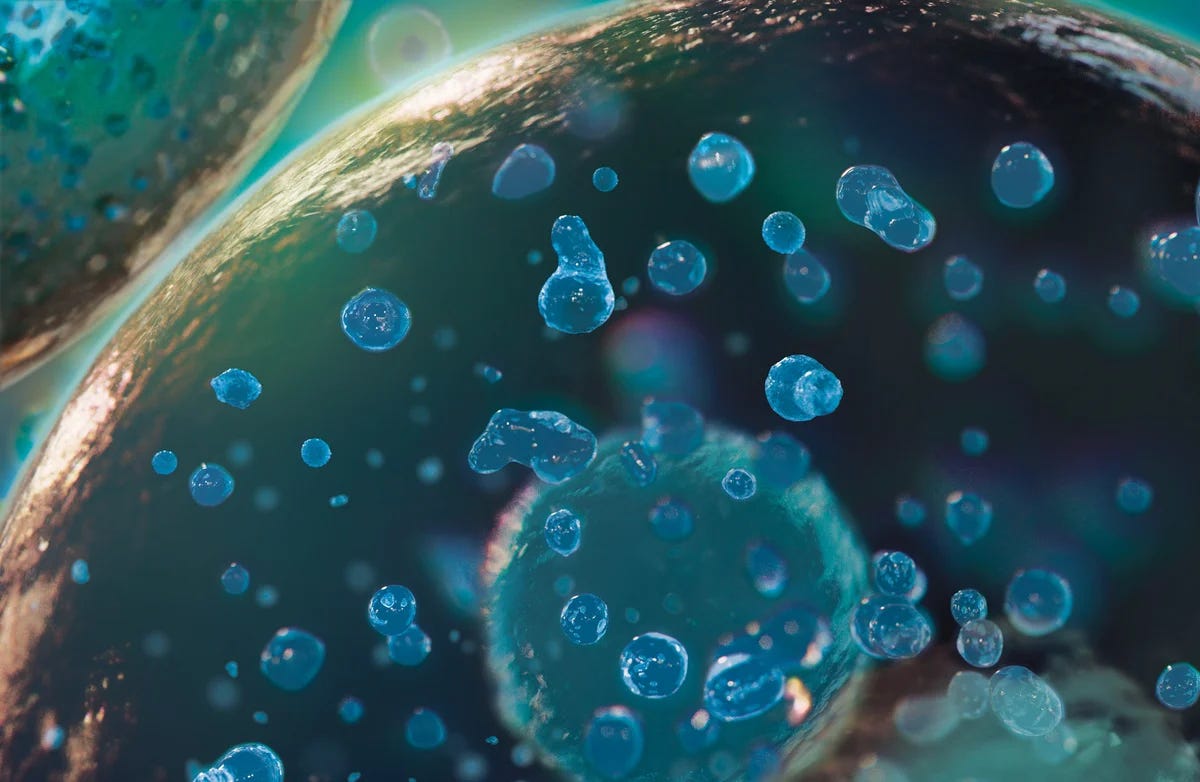

Biology’s Stochastic Pulse

Biology leans on it to understand everything from how molecules travel inside of cells to evolution. Molecules such as proteins, oxygen, and RNA move randomly through cells, so the Wiener process helps make sense of that motion. It helps improve drug efficacy, explain heart function and muscle disease, and deepen our understanding of Alzheimer’s and Parkinson’s disease.

Conservation Efforts

Going from microbiology to evolutionary biology, the process helps to make sense of large genetic movement in species over time. This helps to protect endangered species, provide feedback on the value of conservation efforts from one environment to the next, and predict how populations will respond to environmental changes, disease, and habitat loss. For example, researchers use Wiener filtering to analyze GPS data of African elephants to understand their movements in response to human threats, water and vegetation sources, and features in the landscape.

Astrophysics: Decoding Cosmic Chaos

Astrophysicists use it all the time. They use it to enhance blurry photos retrieved from telescope imaging. The Big Bang left a faint glow (called the Cosmic Microwave Background, or CMB for short), and the Wiener filter helps separate CMB signals from instrument noise in order to better understand the early universe and test our current theories.

The universe is big, and we study things millions of light years away. The Wiener filter helps scientists time-stamp events like flashes or pulses from stars outside of our galaxy.

It even helps with things we cannot really see, like gravitational waves. They produce tiny, faint, and noisy ripples in space-time. The Wiener filter reduces the noise and allows us to understand physics we cannot replicate on Earth.

Essentially, it helps experts wrangle with randomness in nearly every field you can imagine.

Cybernetics: Steering Chaos into Order

In 1948, Wiener published Cybernetics: Or Control and Communication in the Animal and the Machine, coining the term “cybernetics.” It comes from the Greek kybernetes, meaning “steersman.” The concept was simple yet profound: systems (biological, mechanical, or social) govern themselves through feedback. Cybernetics draws on both the Wiener process and the Wiener filter.

The Wiener process models uncertainty in a system. The filter works to minimize the noise in service to getting as pure a signal as possible. When they work together, the system gives itself continuous feedback control. Now it can predict, estimate, and correct outputs in environments with lots of noise.
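A toy loop makes the predict, estimate, correct cycle easier to see. This is a sketch under simplifying assumptions, not a model of any real controller: the system drifts like a random walk (a discrete Wiener process), the sensor adds Gaussian noise, the estimator is a fixed-gain predict-correct filter (a simpler stand-in for a Wiener filter), and the controller pushes back in proportion to the estimated error. All names and gains are hypothetical.

```python
import random


def run_loop(steps, control_gain, filter_gain=0.5, process_std=0.1,
             sensor_std=0.2, seed=0):
    """Run the feedback loop; return the mean squared distance from setpoint 0."""
    rng = random.Random(seed)
    state = 0.0      # true deviation from the setpoint (hidden from controller)
    estimate = 0.0   # the controller's belief about the state
    control = 0.0    # the last corrective action
    squared_error = 0.0
    for _ in range(steps):
        # The world moves: last control action plus a random disturbance.
        state += control + rng.gauss(0.0, process_std)
        # Predict where the state should be, given the action just taken.
        predicted = estimate + control
        # Measure through a noisy sensor.
        reading = state + rng.gauss(0.0, sensor_std)
        # Correct the prediction by a fraction of the surprise.
        estimate = predicted + filter_gain * (reading - predicted)
        # Act: steer back toward the setpoint.
        control = -control_gain * estimate
        squared_error += state * state
    return squared_error / steps


with_feedback = run_loop(2000, control_gain=0.5)
without_feedback = run_loop(2000, control_gain=0.0)
```

Both runs see the same random disturbances because they share a seed. Without feedback the state wanders off, and with feedback it stays near the setpoint, so `with_feedback` comes out far smaller than `without_feedback`.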

The human brain works this way. We constantly predict how things will happen and compare those predictions to the constant feedback we get from our environment. Norbert put math to it, bridging biological functions with computational models. He demonstrated machines could mimic biological learning and directly inspired neural networks and AI technologies.

As we move into the robotic age, we are seeing control systems and cybernetics operate in extremely noisy environments. Think about self-driving cars, autonomous drones, factory robots, smart appliances, and advanced medical devices. It all started with Norbert’s cybernetics.

Cyber(fill in the blank) is everywhere now, but the idea exists in businesses outside of tech, too. The idea that “feedback is destiny” applies to challenges in business, climate, and technology. Feedback loops can amplify both positive and negative outcomes.

Engineers use it for climate control (like your smart thermostat), autonomous anything, and now AI. Social media uses it for algorithms that adapt to user behavior, so some things go viral and you see them over and over again. It affects our buying behavior, habits, and social trends.

Norbert Sounded the Alarm in 1947 and 1964

As Wiener dove deeper into his work, he could foresee moral issues with autonomous machines. He wrote, “The automatic machine is the precise economic equivalent of slave labor. Any labor which competes with slave labor must accept the economic consequences of slave labor.” His warnings about the social and ethical implications of technology were decades ahead of their time. In 1947, The Atlantic published his essay “A Scientist Rebels”, in which he refused to accept military contracts, rejecting the Cold War model of science as state weaponry.

Then, in 1965, he won the National Book Award in Science, Philosophy, and Religion for his book God & Golem, Inc. (1964). It’s one of the earliest, and most urgent, discussions on tech ethics. It built on ideas he published about 15 years before in The Human Use of Human Beings, which still gets cited in debates about AI ethics, algorithmic bias, and the future of work. He warned of a future where automation could strip human life of meaning and called on leaders to steward technology responsibly.

Wiener’s insights are more relevant than ever. As society grapples with the rise of AI, algorithmic governance, and automation, his questions about responsibility, feedback, and the human meaning of work remain urgent.

The memes are new today, but Norbert was talking about all of this nearly 80 years ago.

Shaun Gordon scaled a company to 1,000 employees, built and sold two businesses, and now acquires companies through Astria Elevate in Dallas. Whether you’re building, buying, or thinking about what’s next — he’s always happy to talk.