September 8, 2025

Anthropic just raised a monster $13 billion Series F funding round at an estimated valuation of $183 billion (as of September 2, 2025). The company has taken in billions in other funding from Amazon, Google, Fidelity, and others to develop Claude, its AI model. They named it after Claude Shannon, who left his fingerprints on every AI we have and on the entire information technology industry besides. We can still see those fingerprints today.

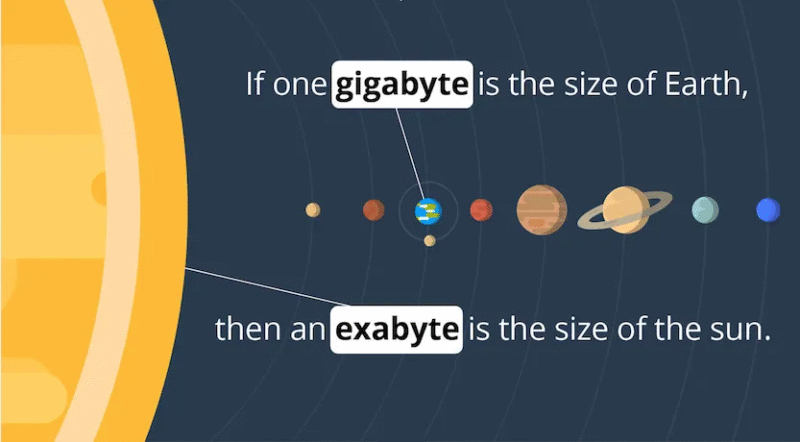

It’s no small thing, either. Modern data infrastructure shuttles over 120 zettabytes of data annually. If you’re not sure what a zettabyte is (a trillion gigabytes), here’s some context: Every person on Earth would need about 58 iPhones to store that much data. I get enough spam calls on the one phone I have, thank you.

But how did we go from storing no digital data to so much of it?

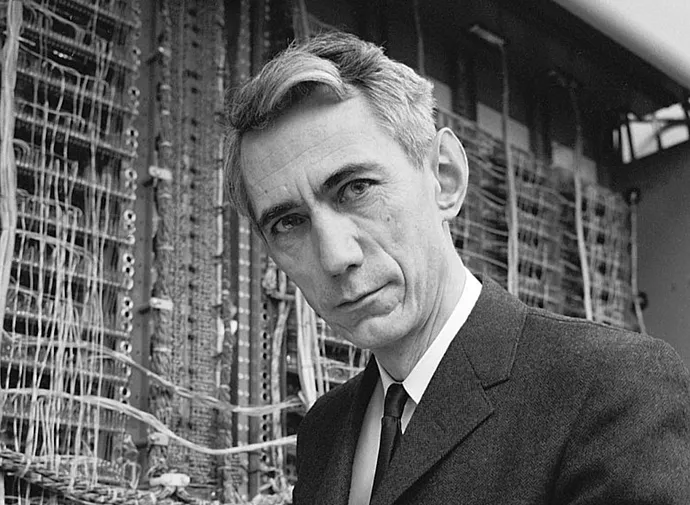

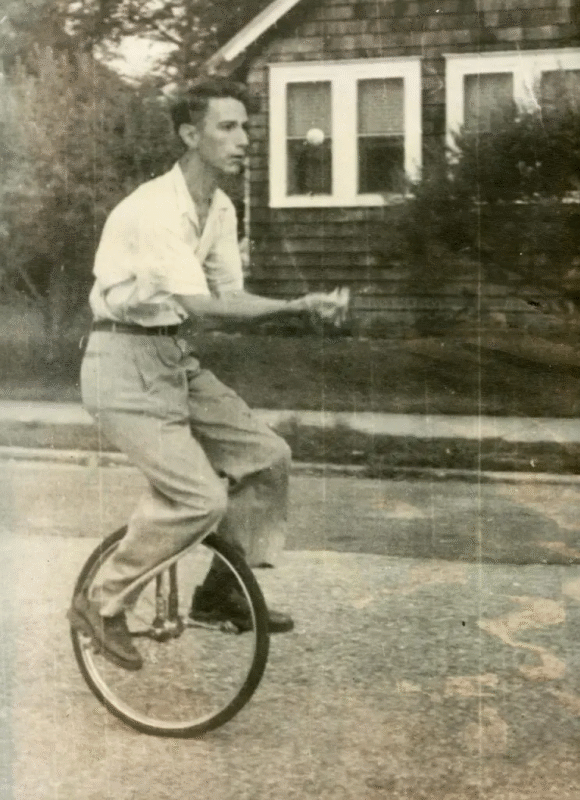

In 1948, while the world obsessed over atomic power and Cold War politics, Claude laid down the building blocks of today’s tech industry, giving precise mathematical meaning to ideas like bits, bandwidth, and noise. If you met him in the narrow halls of Bell Labs back then, you probably wouldn’t have figured him for a public intellectual. You likely would’ve found him riding a unicycle and juggling, a hobby he kept up throughout his life. He eventually joined the MIT juggling team after he became a professor there later in his career.

SIDE NOTE: Speaking of doing amazing things while juggling, check out this kid who’s solving 3 Rubik’s cubes while juggling!

He certainly preferred it to conferences and media interviews, both of which he shunned. Yet, we wouldn’t have the technology we enjoy today without his work. The historian James Gleick called his seminal paper, “A Mathematical Theory of Communication,” more profound than the transistor:

“An invention even more profound and more fundamental [than the transistor] came in a monograph spread across seventy-nine pages of The Bell System Technical Journal in July and October. No one bothered with a press release. It carried a title both simple and grand — “A Mathematical Theory of Communication” — and the message was hard to summarize. ”

“The Magna Carta of the Information Age”

Scientific American went further than Gleick, calling it the “Magna Carta of the Information Age.” Claude changed the future of technology forever when he showed that any information (whether music, video, text, or speech) can be broken into tiny pieces called bits. He proved that all kinds of messages could be measured and compressed so they take up less space, and then sent reliably, even over a noisy line, as long as the sender respects some hard limits. That framework helps all digital technology, from cell phones to the Internet, work as well as it does today.

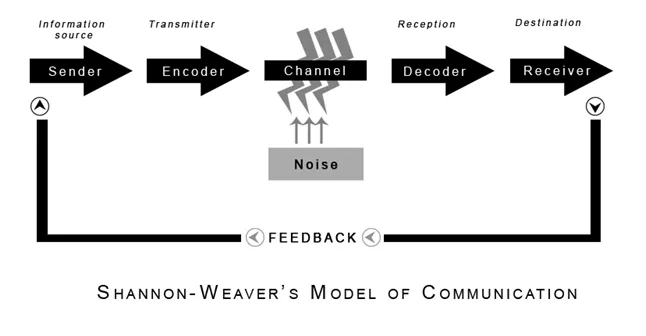

His 1948 work introduced his 5-part model of communication, breaking the process of communication into:

- Source: The origin of the message (a person or a machine).

- Transmitter (Encoder): Converts the message into signals (like a phone turning voice to electrical signals).

- Channel: The pathway the signal travels (such as a wire, airwaves, or fiber optics).

- Receiver (Decoder): Converts signals back into a message (like a phone turning signals into sound).

- Destination: The intended recipient (another person, device, or system).

He realized noise disrupts the signal along the channel, so he introduced ideas like error-correcting codes to protect the accuracy of messages.
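To make the five parts concrete, here is a toy Python simulation, a sketch under stated assumptions rather than anything taken from Shannon’s paper. It assumes a binary symmetric channel (noise flips each bit with some probability) and protects the message with a three-fold repetition code, the simplest error-correcting code; the function names and the sample message are hypothetical.

```python
import random

def source() -> str:
    """Source: the origin of the message (a hypothetical sample text, ASCII only)."""
    return "HELLO"

def transmitter(message: str, repeat: int = 3) -> list[int]:
    """Transmitter (encoder): turn each character into 8 bits, then send every bit
    `repeat` times, a simple repetition code that adds redundancy."""
    bits = [int(b) for ch in message for b in format(ord(ch), "08b")]
    return [b for b in bits for _ in range(repeat)]

def channel(signal: list[int], flip_prob: float, rng: random.Random) -> list[int]:
    """Channel: a binary symmetric channel whose noise flips each bit with probability flip_prob."""
    return [b ^ 1 if rng.random() < flip_prob else b for b in signal]

def receiver(signal: list[int], repeat: int = 3) -> str:
    """Receiver (decoder): majority-vote each group of `repeat` copies, then
    regroup the recovered bits into 8-bit characters."""
    bits = [int(sum(signal[i:i + repeat]) > repeat // 2)
            for i in range(0, len(signal), repeat)]
    return "".join(chr(int("".join(map(str, bits[i:i + 8])), 2))
                   for i in range(0, len(bits), 8))

def destination(message: str) -> None:
    """Destination: the intended recipient (here, just the console)."""
    print("Received:", message)

rng = random.Random(0)  # fixed seed so the run is repeatable
destination(receiver(channel(transmitter(source()), flip_prob=0.02, rng=rng)))
```

Repeating each bit three times lets the receiver outvote any single flipped copy in a group, at the cost of sending three times as much data. Shannon’s theory shows that cleverer codes can buy the same protection far more efficiently.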

Born to Tinker

As a boy, Claude tinkered with radios, model planes, and telegraphs. At the University of Michigan, he paired mathematics with electrical engineering, a rare combination at the time. After he graduated from Michigan, he went to MIT, in Cambridge, Massachusetts, to study with Vannevar Bush. They worked on the differential analyzer, a room-sized mechanical computer.

The machine used rods, gears, shafts, spinning discs, and metal wheels to solve differential equations (problems involving motion or how things change over time). It physically modeled the answers to an equation and output curves with a pen.

Take the simple harmonic motion equation, for example. It describes how something moves back and forth in a repeating way, like a swing or a weight on a spring. Bush’s machine would use spinning parts and rods to represent the acceleration, velocity, and position of the object (the swing or the weight). Once the operators set the machine up just right and turned it on, each part mimicked its piece of the equation, the parts drove one another, and the pen traced out a sine wave.
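In symbols, simple harmonic motion is $\ddot{x} = -\omega^2 x$: the acceleration is proportional to the position and always points back toward the center. The Python sketch below mimics the analyzer’s approach with two integrators chained in a feedback loop. It works numerically with assumed values (a hypothetical frequency of one swing per second and a small time step) and does not reproduce the machine’s actual wheel-and-disc mechanics.

```python
import math

def analyzer_harmonic(omega: float, x0: float, v0: float, dt: float, steps: int) -> list[float]:
    """Mimic two chained integrators solving x'' = -omega**2 * x.

    Integrator 1 turns acceleration into velocity; integrator 2 turns velocity
    into position; position is fed back (times -omega**2) as the acceleration.
    Semi-implicit Euler steps stand in for the machine's continuously turning wheels.
    """
    x, v = x0, v0
    trace = [x]
    for _ in range(steps):
        a = -omega ** 2 * x   # feedback: acceleration depends on position
        v += a * dt           # integrator 1: velocity accumulates acceleration
        x += v * dt           # integrator 2: position accumulates velocity
        trace.append(x)       # the "pen" records position over time
    return trace

omega, dt = 2 * math.pi, 0.001   # hypothetical: one full swing per second
curve = analyzer_harmonic(omega, x0=1.0, v0=0.0, dt=dt, steps=1000)
print(round(curve[250], 3), round(curve[500], 3))  # both close to the exact cosine values, 0 and -1
```

Starting from rest at position 1, the traced curve is a cosine, which is a sine wave shifted by a quarter turn.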

The machine worked well for solving differential equations, and it had a huge impact in the real world. The US Navy and War Department adopted it for ballistics research. Engineers used it to model electrical grids. Geologists used it to study vibrations of the earth. It also helped to solve problems related to flight and aerodynamics.

Claude saw other possibilities in the machine’s electromagnetic relays and switches, realizing they could perform Boolean algebra. Boolean algebra uses only two values, 0 and 1 (false and true), and Claude showed how to wire switches, which are either open or closed, so that they follow its rules. That insight became his 1937 master’s thesis, later praised as “the most important master’s thesis of the 20th century.”
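Here is a minimal Python sketch of that idea. It uses today’s textbook convention (a closed switch is 1, switches in series act as AND, switches in parallel act as OR), which differs from the notation in the thesis itself. The circuits and function names are hypothetical; the check confirms that a four-contact circuit and a single switch behave identically, the kind of simplification Boolean algebra made routine.

```python
from itertools import product

# A switch is modeled as a Boolean: True = closed (current flows), False = open.
def series(*switches: bool) -> bool:
    """Switches in series conduct only if every one is closed: Boolean AND."""
    return all(switches)

def parallel(*switches: bool) -> bool:
    """Switches in parallel conduct if any one is closed: Boolean OR."""
    return any(switches)

def original_circuit(x: bool, y: bool) -> bool:
    # Two parallel branches: x in series with y, and x in series with a "not y" contact.
    return parallel(series(x, y), series(x, not y))

def simplified_circuit(x: bool, y: bool) -> bool:
    # Boolean algebra: xy + x(not y) = x, so a single switch does the same job.
    return x

def equivalent(f, g, n_inputs: int) -> bool:
    """Check that two circuits agree on every combination of switch positions."""
    return all(f(*combo) == g(*combo) for combo in product([False, True], repeat=n_inputs))

print(equivalent(original_circuit, simplified_circuit, 2))  # True
```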

What did his thesis do?

In the 1930s, engineers designed telephone switching circuits and other systems relying on relays and electrical switches largely by intuition and trial and error. Claude’s thesis showed that Boolean algebra could describe and simplify those circuits. That helped optimize telephone systems, but it had an even bigger impact on the digital world. It paved the way for digital calculators and computing machines. Engineers used it to upgrade factories and military equipment, using electromechanical controls to build machines capable of making logical decisions based on inputs.

He gave engineers a way to turn problems of wires and switches into math problems they could solve on paper. Today, every computer works this way: inside every computer are billions of simple switches, and Claude’s work is the reason we know how to arrange them to get useful results.
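As an illustration of arranging switches to get useful results, this Python sketch builds a binary adder out of nothing but AND, OR, and NOT, the same building blocks that switch circuits provide. It is a textbook ripple-carry design chosen for clarity, not a description of any particular machine, and the function names are hypothetical.

```python
# Basic gates: AND (switches in series), OR (switches in parallel), NOT (a normally-closed contact).
def AND(a: int, b: int) -> int:
    return a & b

def OR(a: int, b: int) -> int:
    return a | b

def NOT(a: int) -> int:
    return 1 - a

def XOR(a: int, b: int) -> int:
    # Exclusive OR from the three basic gates: (a AND NOT b) OR (NOT a AND b)
    return OR(AND(a, NOT(b)), AND(NOT(a), b))

def full_adder(a: int, b: int, carry_in: int) -> tuple[int, int]:
    """Add three bits; return (sum_bit, carry_out)."""
    partial = XOR(a, b)
    sum_bit = XOR(partial, carry_in)
    carry_out = OR(AND(a, b), AND(partial, carry_in))
    return sum_bit, carry_out

def add(x: int, y: int, width: int = 8) -> int:
    """Ripple-carry addition: one full adder per bit, least significant bit first."""
    carry, result = 0, 0
    for i in range(width):
        bit, carry = full_adder((x >> i) & 1, (y >> i) & 1, carry)
        result |= bit << i
    return result  # any carry beyond `width` bits is dropped

print(add(19, 23))  # 42
```

Real processors use faster adder designs, but the principle is the same: arithmetic reduces to a pattern of on/off switches.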

Claude reimagined established models and equations, stripping problems to their essentials and building new mathematical worlds. Mentors saw him as an applied mathematician as well as an engineer. He pinpointed his approach to problem solving as “determining the core of a problem and removing details that could be reinserted later.”

It’s impressive Claude could get to the core of problems so effectively, but he also had an uncanny ability to choose the right problems, which brings me to another one of his great quotes, “I am very seldom interested in applications. I am more interested in the elegance of a problem. Is it a good problem, an interesting problem?” I’d say he sure picked an interesting problem in moving information from one place to another.

SIDE NOTE #2: Claude had a ton of great quotes. His biographer put down 104 of them in this article. It’s worth the time to look through them.

Turning Noise into Knowledge

In the 1940s, communication technology was analog and fragile. Long-distance calls degraded across copper lines. Radio carried voices but couldn’t handle data. It’s a far cry from the capacity we have today: by 2027, global mobile data traffic is projected to exceed 330 exabytes per month (about 66 billion HD movies).

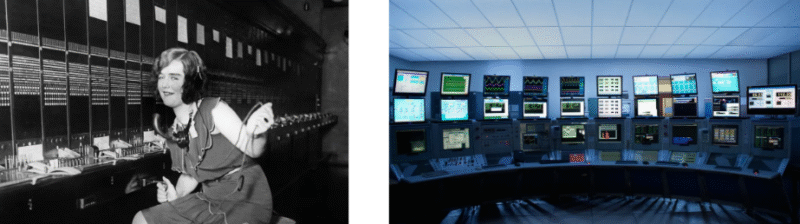

Operators back then focused on reliability: Could a voice call make it coast-to-coast intact? Could wartime codes withstand interception? Claude reframed these as one problem: How to move information through noisy channels. From this came error-correcting codes and digital compression.

This is where Claude’s realization that you could treat voices, photos, or any data as just “information” comes into play. What mattered most wasn’t the type of message, but how much uncertainty (or surprise) each message carried. He made the “bit,” a single yes/no, 1/0 choice, the smallest unit of information, crediting the word itself to the statistician John Tukey. He also developed equations that defined exactly how much data a noisy channel could carry with an arbitrarily small error rate.
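Shannon measured the surprise of an outcome with probability $p$ as $-\log_2 p$ bits, and the average surprise of a source as its entropy, $H = -\sum_i p_i \log_2 p_i$. The short Python sketch below computes both for a few hypothetical sources.

```python
import math

def surprise_bits(p: float) -> float:
    """Self-information of an outcome with probability p, in bits: -log2(p)."""
    return -math.log2(p)

def entropy_bits(probs: list[float]) -> float:
    """Average surprise of a source (Shannon entropy), in bits per symbol."""
    return sum(p * surprise_bits(p) for p in probs if p > 0)

print(surprise_bits(0.5))        # 1.0: a fair coin flip carries exactly one bit
print(entropy_bits([0.5, 0.5]))  # 1.0
print(entropy_bits([0.9, 0.1]))  # about 0.469: a lopsided coin is less surprising on average
print(entropy_bits([0.25] * 4))  # 2.0: four equally likely messages need two bits
```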

Error-correcting codes added redundancy, allowing operators to recover original data if noise had degraded it. Compression removed redundancy to save space. Together, these tools made digital communication robust and efficient. Bell Labs quickly put Claude’s theories to work.
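Compression is the easier half to show in a few lines. The Python sketch below uses Huffman coding, a later technique devised by David Huffman in 1952 rather than Shannon’s own method, to give frequent symbols short codewords and rare symbols long ones. The message and function names are hypothetical.

```python
import heapq
from collections import Counter

def huffman_code(text: str) -> dict[str, str]:
    """Build a prefix code: frequent symbols get short codewords, rare ones get long ones."""
    freq = Counter(text)
    if len(freq) == 1:  # edge case: only one distinct symbol
        return {next(iter(freq)): "0"}
    # Heap entries: (weight, unique tie-breaker, {symbol: codeword so far})
    heap = [(w, i, {sym: ""}) for i, (sym, w) in enumerate(freq.items())]
    heapq.heapify(heap)
    counter = len(heap)
    while len(heap) > 1:
        w1, _, left = heapq.heappop(heap)   # the two least frequent subtrees...
        w2, _, right = heapq.heappop(heap)
        merged = {s: "0" + c for s, c in left.items()}
        merged.update({s: "1" + c for s, c in right.items()})
        heapq.heappush(heap, (w1 + w2, counter, merged))  # ...become one subtree
        counter += 1
    return heap[0][2]

def encode(text: str, code: dict[str, str]) -> str:
    return "".join(code[ch] for ch in text)

def decode(bits: str, code: dict[str, str]) -> str:
    reverse = {c: s for s, c in code.items()}
    out, buf = [], ""
    for b in bits:
        buf += b
        if buf in reverse:  # no codeword is a prefix of another, so the first match is right
            out.append(reverse[buf])
            buf = ""
    return "".join(out)

message = "ABRACADABRA"
code = huffman_code(message)
packed = encode(message, code)
print(len(message) * 8, "bits as 8-bit ASCII vs", len(packed), "bits compressed")  # 88 vs 23
```

Because no codeword begins another codeword, the decoder can read the bit stream left to right without any separators.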

At Bell Labs during WWII, Claude applied these abstractions to high-stakes projects: secure military telephony, transoceanic message transmission, and radar. Allied generals tasked him with designing secure phones and creating radar countermeasures against enemy electronic warfare. All of a sudden, Claude’s academic exercises transformed into urgent wartime needs.

When asked about the pressure, Claude famously said, “I do my best work when I’m not under pressure. I just play with things.” That playfulness solved problems at scale.

The Shannon Limit

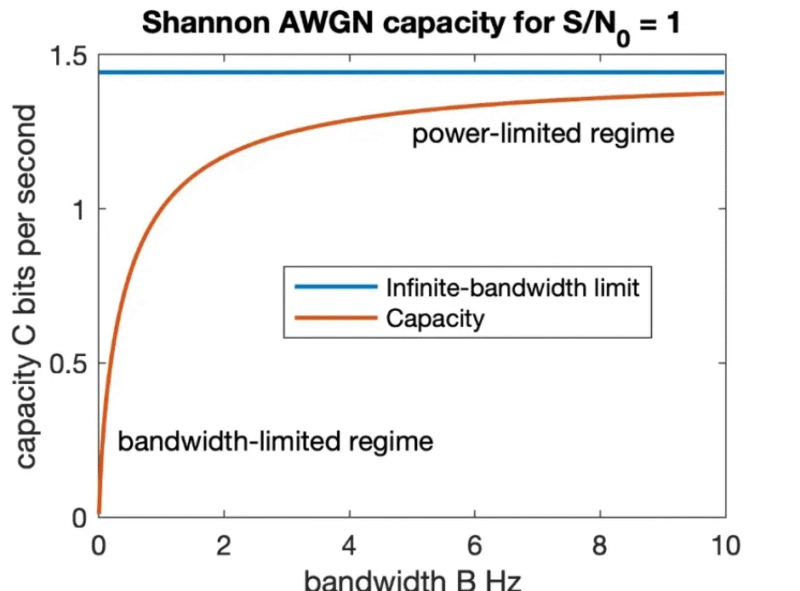

Claude’s 1948 paper established something else that anyone building communication infrastructure must now reckon with: the Shannon Limit. Every channel has a maximum capacity for reliable communication, and his equation gives that ultimate boundary in terms of the channel’s bandwidth and noise.

Engineers at NASA’s Jet Propulsion Laboratory (JPL) relied on Shannon’s ideas to get data back from the Voyager 1 and 2 space probes across more than 14 billion miles. They couldn’t do anything about the physics of faint, noisy signals traveling so far, but they could change how they packaged the information, using error-correcting codes to stay within the channel’s capacity. Stay under the limit, and with good enough coding you can make errors as rare as you like. Now we have high-quality pictures of Jupiter’s storm bands, Saturn’s rings, Neptune’s blue hue, and more.

When you try to push more than the channel can carry, however, you will get errors. Guaranteed. Think about these examples from your life: You get garbled audio or dropped calls when your cell phone signal drops to one bar. Maybe you’ve noticed the internet connection runs slowly at the coffee shop on the corner that people use as an office, because so many laptops are sharing the same Wi-Fi.

Here’s the formula:

$$C = B \log_2\left(1 + \frac{S}{N}\right)$$

C = Channel capacity (in bits per second)

B = Bandwidth (in Hz)

S/N = Signal-to-noise ratio (signal power divided by noise power, as a plain ratio rather than in decibels)
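Plugging numbers into the formula shows how it behaves. This Python sketch assumes a hypothetical voice-grade phone line with 3 kHz of bandwidth and a 30 dB signal-to-noise ratio; because S/N is usually quoted in decibels, it first converts that figure to a plain power ratio.

```python
import math

def shannon_capacity(bandwidth_hz: float, snr_linear: float) -> float:
    """Shannon-Hartley capacity C = B * log2(1 + S/N), in bits per second."""
    return bandwidth_hz * math.log2(1 + snr_linear)

def db_to_linear(snr_db: float) -> float:
    """Convert a signal-to-noise ratio in decibels to a plain power ratio."""
    return 10 ** (snr_db / 10)

# Hypothetical example: a 3 kHz phone line with a 30 dB signal-to-noise ratio (S/N = 1000).
c = shannon_capacity(3_000, db_to_linear(30))
print(f"{c:,.0f} bits per second")  # 29,902 bits per second
```

Doubling the bandwidth would double C, while doubling the signal power would add only about one more bit per second for every hertz of bandwidth.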

Here’s the important takeaway from the formula: noise sets the ceiling for speed and quality. Capacity grows only logarithmically with signal power, so doubling the signal strength buys just a little more speed, and no amount of engineering can push past the capacity that a channel’s bandwidth and noise allow. Engineers still depend on this limit today. Whether they are trying to squeeze out more Netflix streams or laying fiber-optic cables under the sea, they will ask, “How close are we to Shannon?”

Even with immense bandwidth, a sender runs into a limit set by power. Noise is spread across every hertz of the channel, so with a fixed power budget, widening the channel also lets in more noise, and capacity levels off at a finite ceiling. Here’s what the Shannon Limit looks like on a graph, and you can see the infinite-bandwidth limit in blue. Everything above the orange curve is impossible to achieve.
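To see the power limit numerically, assume the noise has a constant density $N_0$ per hertz, so total noise grows with bandwidth ($N = N_0 B$). With signal power $P$, capacity becomes $C = B \log_2\left(1 + \frac{P}{N_0 B}\right)$, which approaches $\frac{P}{N_0}\log_2 e \approx 1.44\,\frac{P}{N_0}$ as $B$ grows without bound. The Python sketch below uses a hypothetical value of $P/N_0$.

```python
import math

def capacity_fixed_power(bandwidth_hz: float, power_over_n0: float) -> float:
    """Capacity when noise grows with bandwidth (N = N0 * B) and signal power P is fixed."""
    return bandwidth_hz * math.log2(1 + power_over_n0 / bandwidth_hz)

p_over_n0 = 1_000.0  # hypothetical P/N0, in hertz

for b in [100, 1_000, 10_000, 100_000, 1_000_000]:
    print(f"B = {b:>9,} Hz -> C = {capacity_fixed_power(b, p_over_n0):7.1f} bit/s")

ceiling = p_over_n0 * math.log2(math.e)  # infinite-bandwidth limit, about 1.44 * P/N0
print(f"Ceiling as B grows without bound: {ceiling:.1f} bit/s")
```

Each tenfold jump in bandwidth buys less than the one before, and the capacity never crosses the ceiling.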

Laypeople generally ignored his work, but experts understood its value and started honoring him with awards. Claude’s playful spirit extended to his treatment of those accolades. He regarded symbols of fame as trinkets, never the point of his work. In fact, he displayed his honorary degrees in his study at home by hanging them on a motorized tie rack, spinning them like trophies of amusement rather than status.

From Mice to Machines

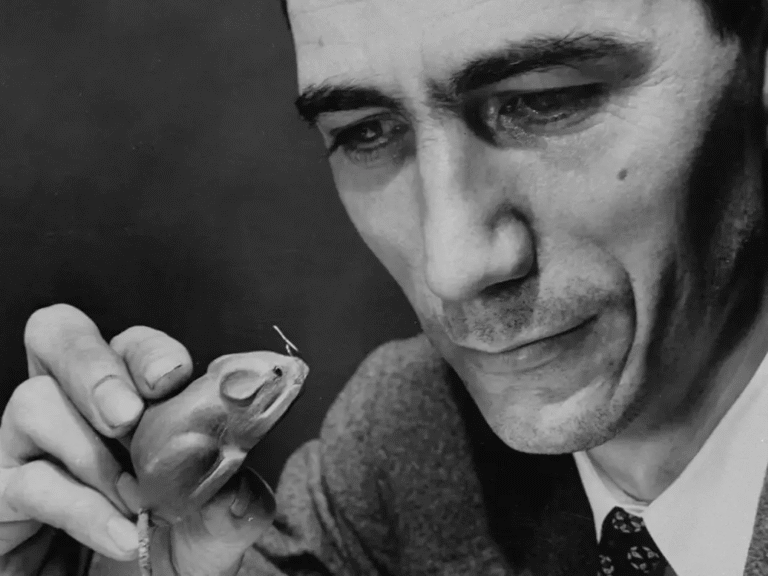

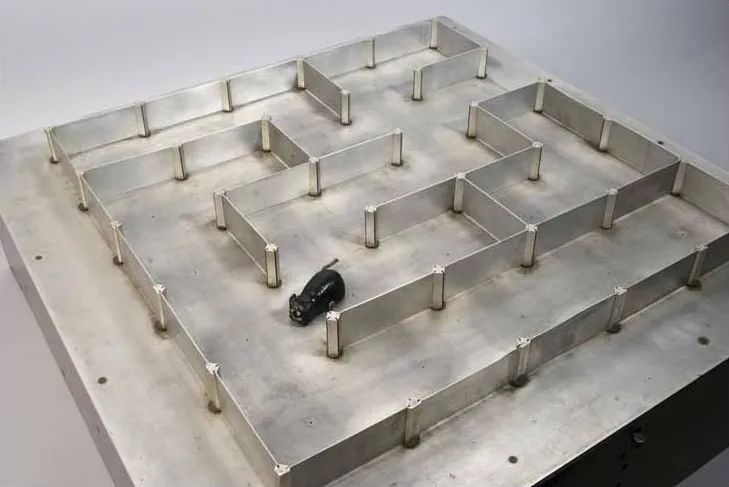

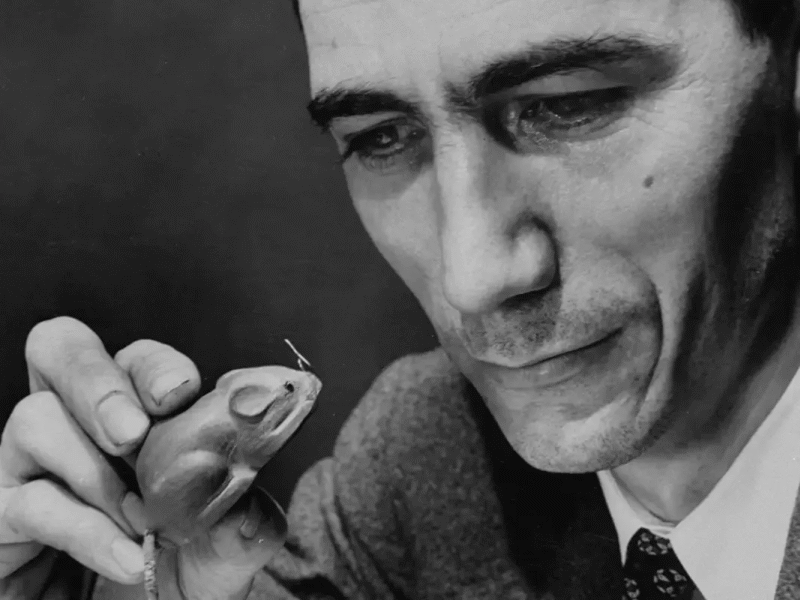

Claude’s playful spirit showed up in machines, as well as math. Around 1950, he built Theseus, a mechanical mouse that could solve mazes. It’s widely cited as one of the first artificial learning devices and a direct ancestor of modern robotics and AI.
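Theseus found its way by trial and error on its first run and then went straight to the goal on its second. The Python sketch below is a simplified software analogy, not a model of Shannon’s relay circuitry. It assumes a hypothetical 5x5 grid maze, a depth-first search that tries north, east, south, and west in that order, and a memory that stores only the direction in which the mouse last left each square.

```python
# Hypothetical maze: "#" = wall, "S" = start, "G" = goal.
MAZE = [
    "S.#..",
    ".##.#",
    "....#",
    "#.#..",
    "..#.G",
]
MOVES = {"N": (-1, 0), "E": (0, 1), "S": (1, 0), "W": (0, -1)}

def open_cell(r: int, c: int) -> bool:
    return 0 <= r < len(MAZE) and 0 <= c < len(MAZE[0]) and MAZE[r][c] != "#"

def find(ch: str) -> tuple[int, int]:
    return next((r, c) for r, row in enumerate(MAZE) for c, x in enumerate(row) if x == ch)

def first_run(start, goal) -> dict:
    """Explore by trial and error, remembering the direction last used to leave each square."""
    memory, visited = {}, {start}

    def explore(cell) -> bool:
        if cell == goal:
            return True
        for d, (dr, dc) in MOVES.items():
            nxt = (cell[0] + dr, cell[1] + dc)
            if open_cell(*nxt) and nxt not in visited:
                visited.add(nxt)
                memory[cell] = d  # a later attempt from this square overwrites a dead end
                if explore(nxt):
                    return True
        return False  # dead end: back up and try elsewhere

    if not explore(start):
        raise ValueError("no route to the goal")
    return memory

def second_run(start, goal, memory) -> list:
    """Replay the remembered exits: no more wrong turns."""
    path, cell = [start], start
    while cell != goal:
        dr, dc = MOVES[memory[cell]]
        cell = (cell[0] + dr, cell[1] + dc)
        path.append(cell)
    return path

start, goal = find("S"), find("G")
memory = first_run(start, goal)           # trial-and-error exploration
print(second_run(start, goal, memory))    # the learned route, straight to the goal
```

On the successful path, the last exit the mouse took from each square points toward the goal, so the memory left behind after the first run traces a clean route.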

As MIT roboticist Rodney Brooks later put it, Claude’s maze-solving mouse was “a foreshadowing of artificial intelligence (long before anyone was using the term).” And as computer scientist Marvin Minsky observed, Claude’s blend of math and play “made him the spiritual father of both AI and modern computing.”

Anthropic agrees. They named their large language model Claude after him.

His theories underpin satellites, QR codes, and video streaming. With Bernard M. Oliver and John R. Pierce at Bell Labs, he also co-wrote “The Philosophy of PCM,” an influential 1948 analysis of pulse-code modulation (PCM), the technique that lets analog signals be stored and transmitted digitally. Digital phone networks and digital audio are built on it.
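PCM boils down to two steps: sample the signal at regular intervals, then round each sample to one of a fixed number of levels and send that level as a binary number. This Python sketch assumes a hypothetical 1 kHz test tone, 8,000 samples per second, and 8 bits per sample, and it uses plain uniform quantization; real telephone PCM also compresses the amplitude scale (companding), which this sketch leaves out.

```python
import math
from collections.abc import Callable

def pcm_encode(signal: Callable[[float], float], sample_rate_hz: float,
               duration_s: float, bits: int) -> list[int]:
    """Sample `signal` (values in [-1.0, 1.0]) at regular intervals and quantize
    each sample to an integer code between 0 and 2**bits - 1."""
    levels = 2 ** bits
    n_samples = round(sample_rate_hz * duration_s)
    codes = []
    for n in range(n_samples):
        x = signal(n / sample_rate_hz)             # sampling
        level = round((x + 1) / 2 * (levels - 1))  # quantizing to the nearest level
        codes.append(level)                        # each code fits in `bits` bits
    return codes

def pcm_decode(codes: list[int], bits: int) -> list[float]:
    """Map each integer code back to the amplitude it stands for in [-1.0, 1.0]."""
    levels = 2 ** bits
    return [c / (levels - 1) * 2 - 1 for c in codes]

def tone(t: float) -> float:
    return math.sin(2 * math.pi * 1_000 * t)  # hypothetical 1 kHz test tone

codes = pcm_encode(tone, sample_rate_hz=8_000, duration_s=0.001, bits=8)
restored = pcm_decode(codes, bits=8)
worst = max(abs(r - tone(n / 8_000)) for n, r in enumerate(restored))
print(codes)  # 8 integers between 0 and 255
print(f"largest quantization error: {worst:.4f}")
```

With 8 bits there are 256 levels, so no decoded sample is off by more than half a step (1/255 on a scale from -1 to 1).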

Critics once dismissed his ideas as too idealized. Others worried AT&T’s control over Bell Labs made his breakthroughs monopolistic. But as fiber optics, satellites, and digital switching spread, Claude’s abstractions became the common language of information exchange.

The Genius Who Got Bored

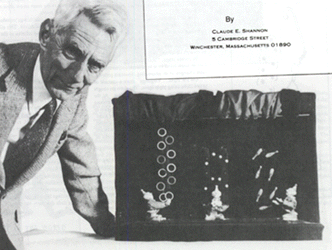

Top left to bottom: The gambling computer | The Little Juggling Clowns. From left to right: Ignatov, Rastelli, and Virgoaga. | Claude’s juggling machine in action | W.C. Fields: Claude’s machine could do a bounce-juggle.

Within a decade of his 1948 paper, Claude had built an entire field, but he grew restless. He returned to MIT as a professor, slowed his formal research, and turned to tinkering. Among his inventions:

- A flame-throwing trumpet

- A rocket-powered frisbee

- Juggling robots

- A wearable gambling computer

The wearable computer has an interesting tie to modern times. He and Edward Thorp built a concealed computer to predict the results at a roulette wheel; Thorp also worked out how to count cards at the blackjack table. Thorp later reported an expected gain of about 44% on their roulette bets when they tested the device in Las Vegas casinos.

He never lost his passion for juggling. He taught the skill, built dioramas to illustrate its math, and shared it with friends and family. Claude always cared about play (whether juggling, building quirky machines, or spinning equations into new worlds) above everything else. That playful spirit turned into the architecture of the digital age. Every text, call, or streamed movie carries the mark of a man who thought information was just another puzzle worth solving.

Sometimes titles like “the father of [insert big thing]” are more generous than accurate. For Claude, it might not be big enough. His work cracked open a language underpinning every text message you send to your friends, every photo in your phone, and the largest AI models we have.

In the year 2000, the University of Munich’s Center for Applied Policy Research wrote:

“Ones and zeros shape our lives today as surely as DNA does; the institutions of our societies could as little function without digital information as our bodies could function without oxygen.”


Twenty-five years later, they may not have gone far enough either. It’s fitting that Claude’s name adorns the flagship product of a company with a twelve-figure valuation. As Anthropic’s AI model grows, hopefully more people will learn about this amazing man. Then again, he probably wouldn’t have cared either way. As the actress playing his wife put it in this video clip, “Claude [didn’t] overly worry about how he’ll be remembered.”

Shaun Gordon scaled a company to 1,000 employees, built and sold two businesses, and now acquires companies through Astria Elevate in Dallas. Whether you’re building, buying, or thinking about what’s next — he’s always happy to talk.